CPM2

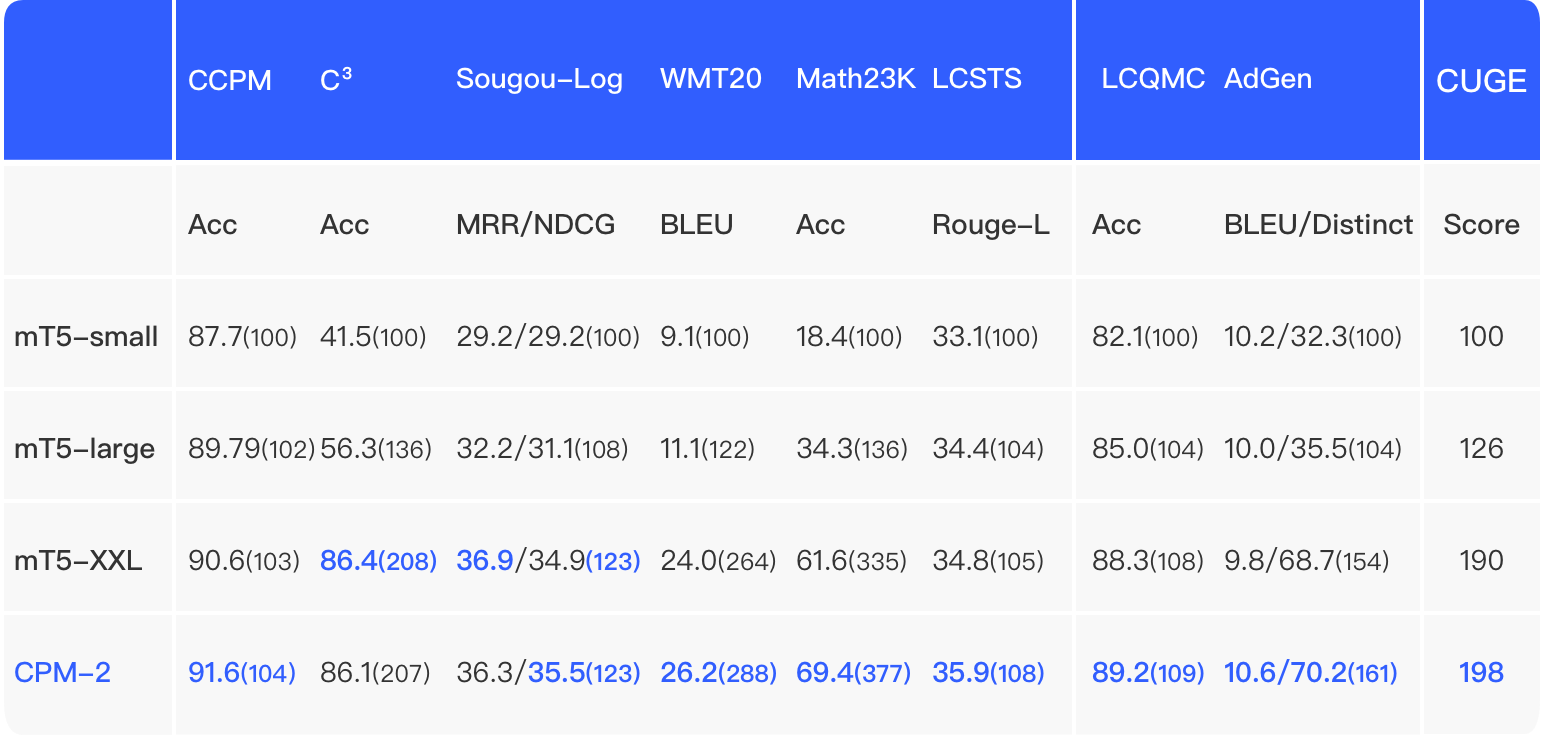

CPM2 is a general-purpose bilingual pretrained language model with 11 billion parameters.
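
To give a rough sense of what 11 billion parameters means in practice, the short sketch below estimates how much memory the weights alone would occupy at a few common numeric precisions. It is back-of-the-envelope arithmetic under stated assumptions, not a measured figure for CPM2. It assumes every parameter is stored at the listed precision and ignores activations, optimizer state, and framework overhead. The names `NUM_PARAMS`, `BYTES_PER_PARAM`, and `weight_memory_gb` are illustrative only.

```python
# Back-of-the-envelope weight-memory estimate for an 11-billion-parameter model.
# Assumption: every parameter is stored at the listed precision; activations,
# optimizer state, and framework overhead are ignored.
NUM_PARAMS = 11_000_000_000

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {
    "fp32": 4,
    "fp16": 2,
    "int8": 1,
}


def weight_memory_gb(num_params: int, bytes_per_param: int) -> float:
    """Return weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for dtype, nbytes in BYTES_PER_PARAM.items():
        print(f"{dtype}: {weight_memory_gb(NUM_PARAMS, nbytes):.0f} GB")
```

Running it prints roughly 44 GB for fp32, 22 GB for fp16, and 11 GB for int8. This is why a model of this size is usually loaded in half precision or split across several accelerators.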

CPM2 is based on the encoder-decoder architecture and has 7 general language capabilities. An updated version, CPM2.1, was released in November 2021. CPM2.1 introduces a generative pre-training task and is trained with a continual learning paradigm, which enhances its generation ability.
