EVA

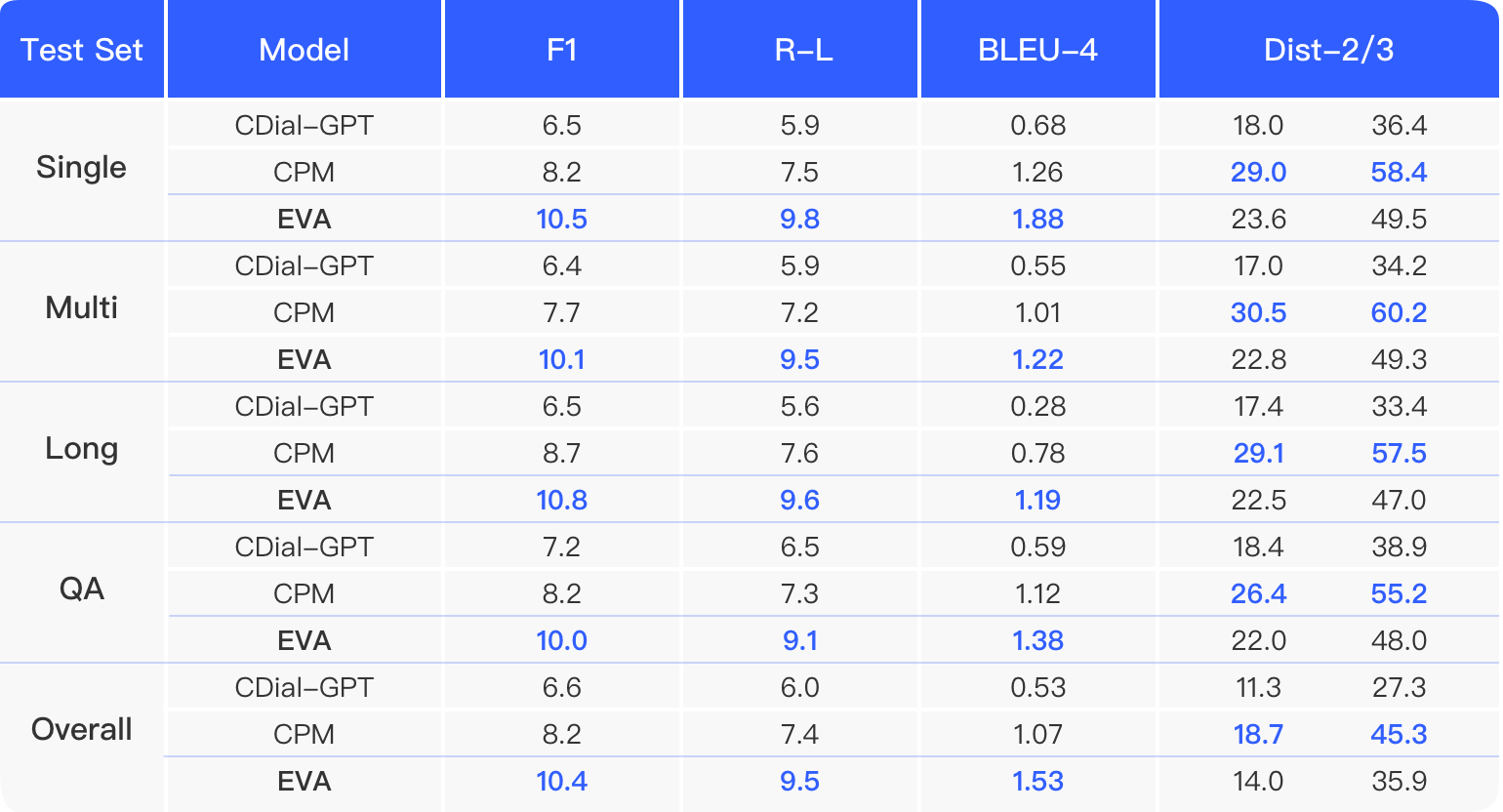

EVA is a Chinese pre-trained dialogue model with 2.8 billion parameters.

EVA uses an encoder-decoder architecture and performs well on many dialogue tasks, especially in multi-turn human-bot conversations.
