BMInf

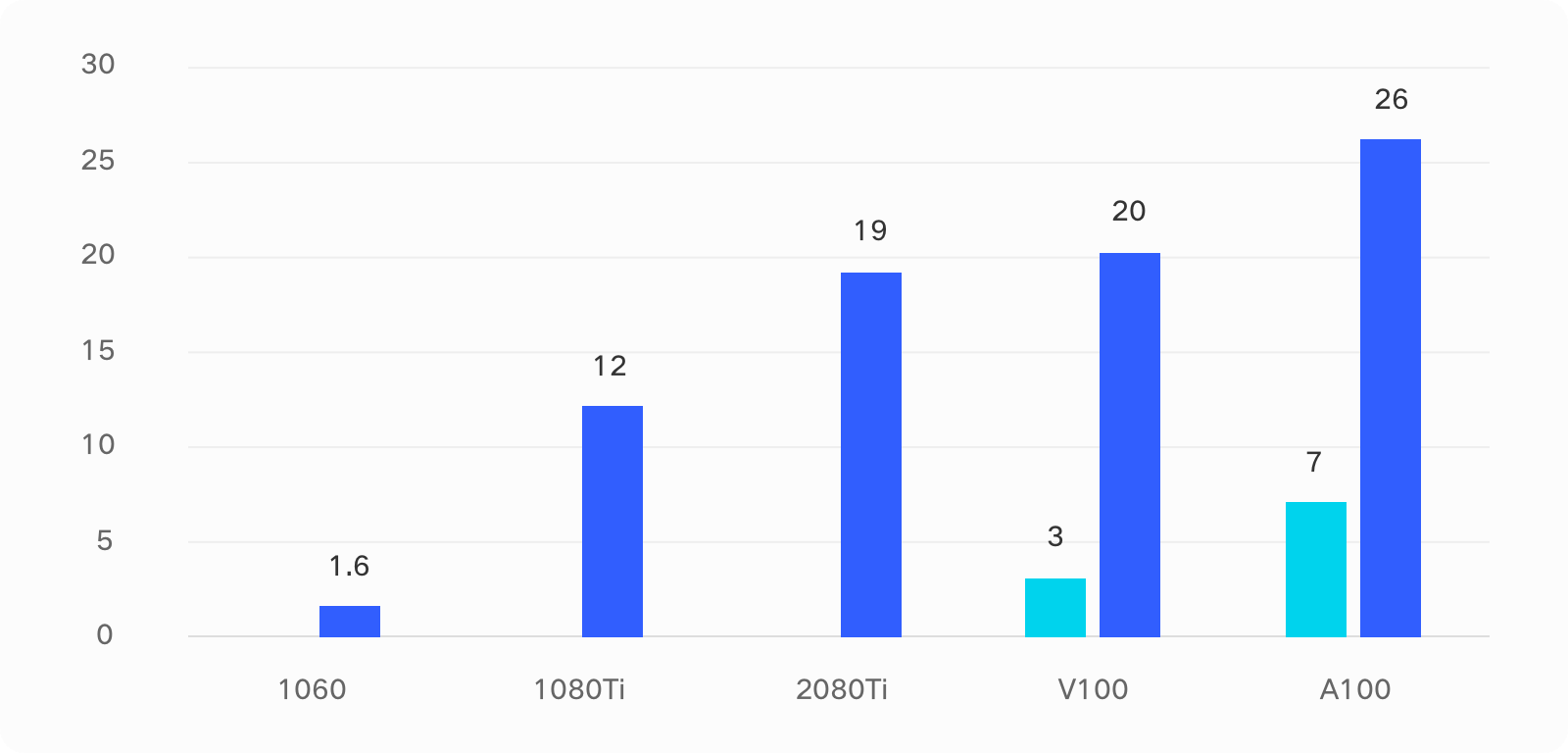

Run big-model inference on a thousand-yuan GPU. BMInf provides low-cost, high-efficiency inference for big models: it can run models with more than 10 billion parameters on a single thousand-yuan GPU (GTX 1060).

GitHub

GitHub Doc
